Level Up your Spring Boot Java Application with GraalVM

Introduction

This lab is for developers looking to understand how to containerise GraalVM Native Image applications.

GraalVM Native Image can compile a Java application ahead of time into a native executable. Only the code that is required at run time is included in the executable, so the application uses a fraction of the resources required by the JVM, starts in milliseconds, and delivers peak performance with no warmup. The native executable can also be packaged into a lightweight container image for faster and more efficient deployment.

In addition, there are Maven and Gradle plugins for Native Image so you can easily build, test, and run Java applications as native executables.

Estimated lab time: 60 minutes

Lab Objectives

In this lab you will:

- Add a basic Spring Boot application to a container image and run it

- Build a native executable from this application, using GraalVM Native Image

- Add a native executable to a container

- Shrink your application container image size with GraalVM Native Image and Distroless solutions

- See how to use GraalVM Native Build Tools, in particular the Maven plugin

- Use GitHub Actions to automate the build of a native executable as part of a CI/CD pipeline

NOTE: If you see the laptop icon in the lab, this means you need to do something such as enter a command. Keep an eye out for it.

STEP 1: Connect to a Virtual Host

First, connect to a remote host in Oracle Cloud - you will develop your application on an Oracle Cloud compute host.

Your development environment is provided by a remote host: an OCI Compute Instance with Oracle Linux 8, 4 cores, and 48GB of memory. The Luna Labs desktop environment will display before the remote host is ready, which can take up to two minutes. To check whether the host is ready, double-click the Luna Lab icon on the desktop to open the browser.

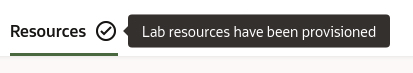

The Resources tab will be displayed. Note that the cog shown next to the Resources title will spin while the compute instance is being provisioned in the cloud. When the instance is provisioned, you will see a checkmark:

A Visual Studio (VS) Code window will open and automatically connect to the VM instance that has been provisioned for you. Click Continue to accept the machine fingerprint.

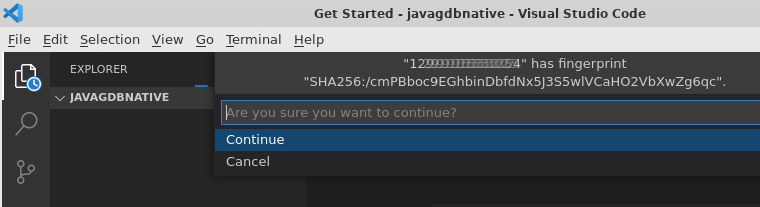

If you don't hit Continue, VS Code will show you a dialogue, shown below. Hit Retry and VS Code will ask you to accept the machine fingerprint. Hit Continue, as in the above step.

Issues With Connecting to the Remote Development Environment

If you encounter any issues connecting to the remote development environment in VS Code that are not covered above, try the following:

- Close VS Code

- Copy the Configure Script from the Resources tab and paste it into the Luna Desktop Terminal again

- Repeat the above instructions to connect to the Remote Development environment

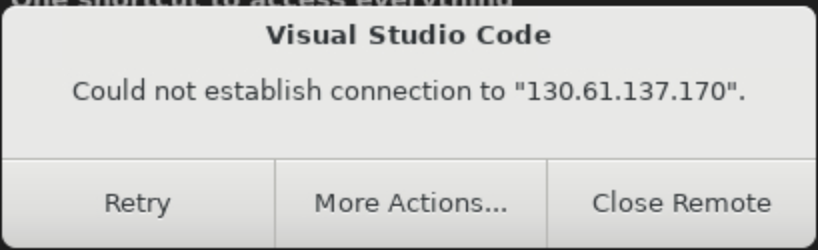

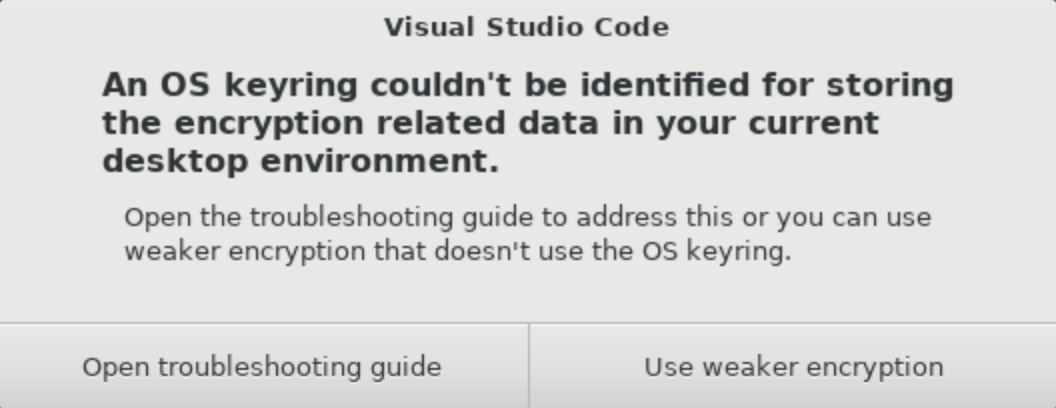

If you see an OS keyring message saying that a keyring for storing encryption-related data could not be identified, choose "Use weaker encryption".

You are now successfully connected to a remote host in Oracle Cloud!

Next, open a terminal within VS Code. This terminal will allow you to interact with the remote host. A terminal can be opened in VS Code through the menu: Terminal > New Terminal. Use this terminal in the rest of the lab.

VS Code may prompt you to install plugins and extensions. Ignore/close the notification prompts as this lab doesn't need any plugins and extensions.

Note on the Development Environment

Your development environment comes preconfigured with Oracle GraalVM 25 as the Java environment for this lab.

You can check this by running these commands in the terminal:

java -version
native-image --version

Note: Oracle Cloud Infrastructure (OCI) provides Oracle GraalVM at no cost.
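If you would rather confirm the runtime from inside a Java program than from the shell, the following minimal sketch reads a few standard system properties and the JDK feature release number. The class name JavaEnvCheck is hypothetical, and the exact property values vary between JDK builds, so no sample output is shown.

```java
// Hypothetical sketch: report which Java runtime this program is running on,
// using only standard system properties and Runtime.version().
class JavaEnvCheck {

    // Feature release number of the running JDK, e.g. 25 for JDK 25.
    static int featureVersion() {
        return Runtime.version().feature();
    }

    // One-line summary built from standard system properties (values may be null on some builds).
    static String summary() {
        return "java.version=" + System.getProperty("java.version")
                + ", java.vm.name=" + System.getProperty("java.vm.name")
                + ", java.vendor.version=" + System.getProperty("java.vendor.version");
    }

    public static void main(String[] args) {
        System.out.println(summary());
        if (featureVersion() < 25) {
            System.out.println("Warning: this lab expects JDK 25 or later");
        }
    }
}
```

Because the first top-level class in the file has a main method, you can run it with the single-file source launcher, for example java JavaEnvCheck.java, without a separate javac step.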

STEP 2: Review the Sample Application

In this step, you are going to compile and package a Java application with a very minimal REST API. Then you will containerise this application using Docker.

The source code and build scripts for this application were provisioned for you and opened in VS Code. Take a look.

Explanation

The application is built with Spring Boot. There are two ways to build a Spring Boot application ahead of time:

- Using Cloud Native Buildpacks to generate a lightweight container image containing a native executable.

- Using GraalVM Native Build Tools to generate a native executable.

This lab demonstrates the second way.

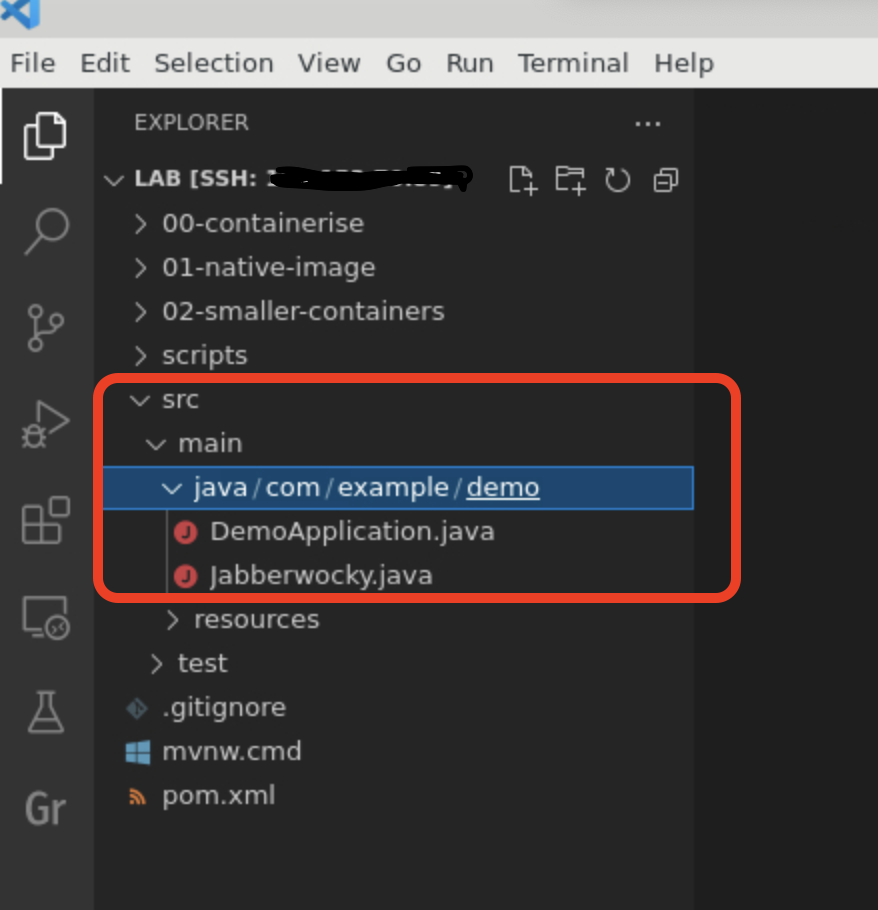

The application has two classes, which can be found in src/main/java:

- com.example.demo.DemoApplication: the main Spring Boot class, which also defines the HTTP endpoint /jibber.

- com.example.demo.Jabberwocky: a utility class that implements the logic of the application.

So, what does the application do? If you call the REST endpoint /jibber, it returns some nonsense verse generated in the style of the Jabberwocky poem by Lewis Carroll.

The program achieves this by using a Markov Chain to model the original poem (this is essentially a statistical model).

The application ingests the text of the poem and creates a statistical model from it. It uses the RiTa library to do the heavy lifting: building and using Markov chains.
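The lab hands this work to RiTa's RiMarkov class. To make the idea concrete, here is a minimal sketch of an order-n, word-level Markov chain: each state is a run of n consecutive words, mapped to every word observed directly after it, and generation repeatedly samples one of those followers. TinyMarkov and its methods are hypothetical names, and this is not how RiTa is implemented.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical sketch of an order-n, word-level Markov chain (not RiTa's implementation).
class TinyMarkov {
    private final int order; // number of consecutive words that make up one state
    private final Map<List<String>, List<String>> transitions = new HashMap<>();

    TinyMarkov(int order) {
        this.order = order;
    }

    // For every run of `order` consecutive words, record the word that follows it.
    void addText(String text) {
        String[] words = text.trim().split("\\s+");
        for (int i = 0; i + order < words.length; i++) {
            List<String> state = List.of(Arrays.copyOfRange(words, i, i + order));
            transitions.computeIfAbsent(state, k -> new ArrayList<>()).add(words[i + order]);
        }
    }

    // Words observed directly after the given state (empty if the state was never seen).
    List<String> followers(String... state) {
        return transitions.getOrDefault(List.of(state), List.of());
    }

    // Start from a random state and keep sampling followers until maxWords or a dead end.
    List<String> generate(int maxWords, Random random) {
        List<List<String>> states = new ArrayList<>(transitions.keySet());
        if (states.isEmpty()) {
            return List.of();
        }
        List<String> out = new ArrayList<>(states.get(random.nextInt(states.size())));
        while (out.size() < maxWords) {
            List<String> state = out.subList(out.size() - order, out.size());
            List<String> next = transitions.get(state);
            if (next == null) {
                break; // this state only appeared at the very end of the text
            }
            out.add(next.get(random.nextInt(next.size())));
        }
        return out;
    }

    public static void main(String[] args) {
        TinyMarkov model = new TinyMarkov(2);
        model.addText("the cat sat on the cat mat");
        System.out.println(model.followers("the", "cat")); // [sat, mat]
        System.out.println(String.join(" ", model.generate(10, new Random(42))));
    }
}
```

With order 2, the state "the cat" is followed once by "sat" and once by "mat", so both are candidates when generating. In general, longer states reproduce longer stretches of the source text, while shorter states give stranger output.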

Below are two snippets from the utility class com.example.demo.Jabberwocky that builds the model.

The text variable contains the text of the original poem.

This snippet shows how the model is created and then populated with text.

This code is called from the class constructor, and the class is defined as a Singleton (so only one instance of the class is ever created):

this.r = new RiMarkov(3);
this.r.addText(text);

Here you can see the method that generates new lines of verse from the model, based on the original text.

public String generate() {
    String[] lines = this.r.generate(10);
    StringBuffer b = new StringBuffer();
    for (int i = 0; i < lines.length; i++) {
        b.append(lines[i]);
        b.append("<br/>\n");
    }
    return b.toString();
}

Action

To build the application, you are going to use Maven. The pom.xml file was generated using Spring Initializr and supports GraalVM Native Build Tools.

Build the application. From the root directory of the repository, run the following command in your shell:

mvn clean package

This will generate a runnable JAR file that contains all of the application's dependencies and a correctly configured MANIFEST file.

Run this JAR file and then "ping" the application's endpoint to see what you get in return. Put the command into the background using & so that you get the prompt back:

java -jar ./target/jibber-0.0.1-SNAPSHOT.jar &

Call the endpoint using the curl command from the command line. When you paste the command into your terminal, VS Code may prompt you to open the URL in a browser; just close the dialogue.

Run the following to test the HTTP endpoint:

curl http://localhost:8080/jibber

Did you get some nonsense verse back? Now that you have built a working application, terminate it and move on to containerising it.
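While the application is still running, you could also call the endpoint from Java instead of curl. The following is a minimal sketch using the JDK's built-in java.net.http.HttpClient. JibberClient is a hypothetical class, and it assumes the endpoint returns the string built by the generate() method shown earlier, with lines separated by "<br/>\n".

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.Arrays;
import java.util.List;

// Hypothetical client for the lab's /jibber endpoint, assuming it listens on localhost:8080.
class JibberClient {

    // generate() joins verse lines with "<br/>\n", so split on that to recover plain lines.
    static List<String> toLines(String body) {
        return Arrays.stream(body.split("<br/>\n"))
                .filter(line -> !line.isBlank())
                .toList();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://localhost:8080/jibber"))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println("HTTP " + response.statusCode());
        toLines(response.body()).forEach(System.out::println);
    }
}
```

Run it with java JibberClient.java while the application is listening on port 8080; it prints the HTTP status followed by one verse line per row.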

Bring the application to the foreground so you can terminate it.

fg

Enter <ctrl-c> to terminate the application:

<ctrl-c>

STEP 3: Containerise Your Java Application with Docker

Explanation

Containerising a Java application in a container image is straightforward.

You can build a new Docker image based on one that contains a JDK distribution.

For this lab you will use a container image with Oracle Linux 9 and Oracle JDK 25 under the No-Fee Terms and Conditions (NFTC): container-registry.oracle.com/java/jdk-no-fee-term:25-oraclelinux9.

The following is a breakdown of the Dockerfile, which describes how to build the Docker image. The comments explain each instruction.

# Base image: Oracle Linux 9 with Oracle JDK 25 (NFTC)
FROM container-registry.oracle.com/java/jdk-no-fee-term:25-oraclelinux9
# Pass in the JAR file as an argument to the image build
ARG JAR_FILE
# This image needs to expose TCP port 8080, as this is the port on which your app will listen
EXPOSE 8080
# Copy the JAR file from the `target` directory into the root of the image
COPY ${JAR_FILE} app.jar
# Run Java when starting the container
ENTRYPOINT ["java"]
# Pass in the parameters to the Java command that make it load and run your executable JAR file
CMD ["-jar","app.jar"]

Action

The Dockerfile to containerise your Java application can be found in the 00-containerise directory.

To build a Docker image containing your application, run the following command from your terminal:

docker build -f ./00-containerise/Dockerfile \
    --build-arg JAR_FILE=./target/jibber-0.0.1-SNAPSHOT.jar \
    -t localhost/jibber:java.01 .

Query Docker to look at your newly built image:

docker images

You should see the new image listed along with its details. The size of the image is shown in the last column; it is around 676MB.

Run this image as follows:

docker run --rm -d --name "jibber-java" -p 8080:8080 localhost/jibber:java.01

Then call the endpoint as you did before using the curl command:

curl http://localhost:8080/jibber

Did you see the nonsense verse?

Now check how long it took your application to start up. You can extract this from the logs, as Spring Boot applications write their startup time to the logs:

docker logs jibber-java

For example, in this run the application started up in 1.169 seconds. Here is the extract from the logs:

2025-05-03T07:10:29.461Z INFO 1 --- [main] com.example.demo.DemoApplication : Started DemoApplication in 1.169 seconds

Now terminate your container and move on:

docker kill jibber-java

STEP 4: Build a Native Executable

To recap: so far you have built a Spring Boot application with an HTTP endpoint and successfully containerised it. Now you will look at how you can create a native executable from your application. This native executable is going to start really fast and use fewer resources than its corresponding Java application.

Explanation

You can use the native-image tool from the GraalVM installation to build a native executable.

However, as you are already using Maven, you can apply the GraalVM Native Build Tools for Maven, which let you carry on using Maven.

You need to make sure that you are using spring-boot-starter-parent in order to inherit the out-of-the-box native profile and that the org.graalvm.buildtools:native-maven-plugin plugin is used.

You should see the following in the Maven pom.xml file:

<parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>3.2.0</version>
    <relativePath/> <!-- lookup parent from repository -->
</parent>

<build>
    <plugins>
        <plugin>
            <groupId>org.graalvm.buildtools</groupId>
            <artifactId>native-maven-plugin</artifactId>
        </plugin>
        ...
    </plugins>
</build>

With the out-of-the-box native profile active, you can invoke the native:compile goal to trigger the native-image compilation.

Notice that you can pass additional configuration arguments to the underlying native-image build tool using the <buildArgs> section.

In individual buildArg tags, you can pass parameters exactly the same way as you do from a command line.

In the pom.xml, there is a Maven profile called baseline with an additional build argument -J-Xmx16G to provide more memory to Native Image, as shown below:

<profiles>
    <profile>
        <id>baseline</id>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.graalvm.buildtools</groupId>
                    <artifactId>native-maven-plugin</artifactId>
                    <configuration>
                        <imageName>${artifactId}</imageName>
                        <buildArgs combine.children="append">
                            <!-- Provide more memory to Native Image -->
                            <buildArg>-J-Xmx16G</buildArg>
                        </buildArgs>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </profile>
    ...
</profiles>

Action

Run the Maven build using the profiles (note that profile names are specified with the -P flag):

mvn native:compile -Pnative -Pbaseline

This will generate a native executable for the platform in the target directory, called jibber. It takes approximately 3-4 minutes to generate the native executable. Take a look at the size of the file:

ls -lh target/jibber

Run this native executable and test it. Execute the following command in your terminal to run the native executable and put it into the background, using &:

./target/jibber &

Now you have a native executable of the application that starts really fast: in this example, in just 0.034 seconds!

Call the endpoint using the curl command:

curl http://localhost:8080/jibber

You should see a nonsense verse in the style of the poem Jabberwocky.

Terminate the application before you move on. Bring the application into the foreground:

fg

Terminate it with <ctrl-c>:

<ctrl-c>
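You have now run the same application both as a JAR on the JVM and as ./target/jibber. If your own code ever needs to tell the two apart, one option is to check the org.graalvm.nativeimage.imagecode system property, which Native Image sets to "buildtime" while building the image and to "runtime" inside the finished executable, and which is not set on a regular JVM. Spring Framework's NativeDetector relies on the same property. The sketch below uses a hypothetical helper class, RuntimeMode; in real code you may prefer org.graalvm.nativeimage.ImageInfo from the GraalVM SDK, which wraps this property.

```java
// Hypothetical helper: report whether code runs on the JVM or inside a native executable,
// based on the "org.graalvm.nativeimage.imagecode" system property set by Native Image.
class RuntimeMode {
    static final String IMAGE_CODE_KEY = "org.graalvm.nativeimage.imagecode";

    // Map the property value (null when unset) to a human-readable description.
    static String describe(String imageCode) {
        if (imageCode == null) {
            return "JVM";
        }
        return switch (imageCode) {
            case "runtime" -> "native executable";
            case "buildtime" -> "native-image build";
            default -> "unknown (" + imageCode + ")";
        };
    }

    static String current() {
        return describe(System.getProperty(IMAGE_CODE_KEY));
    }

    public static void main(String[] args) {
        System.out.println("Running as: " + current());
    }
}
```

On a regular JVM, java RuntimeMode.java prints "Running as: JVM"; compiled with native-image, the resulting executable would report "native executable".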

STEP 5: Containerise your Native Executable

Now that you have a native executable version of your application and have seen it working, containerise it.

Explanation

A Dockerfile is provided for containerising this native executable: native-image/containerisation/lab/01-native-image/Dockerfile.

The contents are shown below, along with comments to explain each line.

# Base image: Oracle Linux 9 slim
FROM container-registry.oracle.com/os/oraclelinux:9-slim
# Pass in the native executable
ARG APP_FILE
# This image needs to expose TCP port 8080, as this is the port your app listens on
EXPOSE 8080
# Copy the native executable into the root directory and call it "app"
COPY ${APP_FILE} app
# Just run the native executable :)
ENTRYPOINT ["/app"]

Action

To build, run the following from your terminal:

docker build -f ./01-native-image/Dockerfile \
    --build-arg APP_FILE=./target/jibber \
    -t localhost/jibber:native.01 .

Run and test it as follows from the terminal:

docker run --rm -d --name "jibber-native" -p 8080:8080 localhost/jibber:native.01

Call the endpoint from the terminal using curl:

curl http://localhost:8080/jibber

Again, you should see more nonsense verse in the style of the poem Jabberwocky.

You can see how long the application took to start up by looking at the logs it produced, as you did earlier. From your terminal, run the following and look for the startup time:

docker logs jibber-native

You should see a number similar to 0.032 seconds. That is a big improvement compared to the 1.169 seconds of the Java container!

2025-05-03T07:26:21.226Z INFO 1 --- [ main] com.example.demo.DemoApplication : Started DemoApplication in 0.032 seconds (process running for 0.034)

Terminate your container and move on to the next step:

docker kill jibber-native

But before you go to the next step, take a look at the size of the container image produced:

docker images

The container image size is around 199MB, quite a lot smaller than the original Java container image of around 676MB!
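So far you have read the startup times from the logs by eye. To compare them in code, here is a minimal sketch that extracts the "Started ... in N seconds" value with a regular expression, using the two log lines shown in this lab. StartupTime is a hypothetical helper, and the pattern only covers the log format shown here.

```java
import java.util.Locale;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extract the startup time from a Spring Boot "Started ..." log line.
class StartupTime {
    // Matches e.g. "Started DemoApplication in 1.169 seconds" and captures the number.
    private static final Pattern STARTED =
            Pattern.compile("Started \\S+ in ([0-9]+(?:\\.[0-9]+)?) seconds");

    static Optional<Double> seconds(String logLine) {
        Matcher m = STARTED.matcher(logLine);
        return m.find() ? Optional.of(Double.parseDouble(m.group(1))) : Optional.empty();
    }

    public static void main(String[] args) {
        // The two log lines shown in this lab (Java container, then native container).
        String jvmLine = "2025-05-03T07:10:29.461Z INFO 1 --- [main] "
                + "com.example.demo.DemoApplication : Started DemoApplication in 1.169 seconds";
        String nativeLine = "2025-05-03T07:26:21.226Z INFO 1 --- [ main] "
                + "com.example.demo.DemoApplication : Started DemoApplication in 0.032 seconds "
                + "(process running for 0.034)";
        double jvm = seconds(jvmLine).orElseThrow();
        double nat = seconds(nativeLine).orElseThrow();
        System.out.printf(Locale.ROOT, "JVM %.3fs, native %.3fs, about %.0fx faster%n",
                jvm, nat, jvm / nat);
    }
}
```

Run with java StartupTime.java; with these two log lines it prints "JVM 1.169s, native 0.032s, about 37x faster". To use your own numbers, pass in the matching lines from docker logs jibber-java and docker logs jibber-native.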

STEP 6: Build a Mostly Static Executable and Package it in a Distroless Container

To recap, so far you have:

- Built a Spring Boot application with an HTTP endpoint, /jibber

- Successfully containerised it

- Built a native executable of your application using the GraalVM Native Build Tools for Maven

- Containerised your native executable

It would be great if you could shrink your container size even further, because smaller containers are quicker to download and start.

Explanation

With GraalVM Native Image you can statically link system libraries into the native executable. If you build a fully statically linked native executable, you can package it directly into an empty Docker image, also known as a scratch container.

Another option is to statically link everything except the standard C library, glibc. We call this a "mostly static executable". You can package a mostly static executable into a small container image that provides glibc, for example Google's Distroless, which contains the glibc library, some standard files, and SSL security certificates.

You shouldn't produce a fully static executable using glibc, as glibc was designed to work as a shared library. However, GraalVM Native Image can produce fully statically linked executables using the musl C library; the GraalVM Native Image documentation has the details.

So, next, build a mostly static executable and then package it into a Distroless container.

In the pom.xml, there is a profile called distroless with an additional build argument --static-nolibc to produce a mostly static native executable, as shown below:

<profiles>
    ...
    <profile>
        <id>distroless</id>
        <build>
            <plugins>
                <plugin>
                    <groupId>org.graalvm.buildtools</groupId>
                    <artifactId>native-maven-plugin</artifactId>
                    <configuration>
                        <imageName>${artifactId}-distroless</imageName>
                        <buildArgs combine.children="append">
                            <!-- Provide more memory to Native Image -->
                            <buildArg>-J-Xmx16G</buildArg>
                            <!-- Mostly static -->
                            <buildArg>--static-nolibc</buildArg>
                        </buildArgs>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </profile>
</profiles>

The Dockerfile for this step can be found at native-image/containerisation/lab/02-smaller-containers/Dockerfile.

Take a look at the contents of the Dockerfile, which has comments to explain each line:

# The base image, which is Distroless
FROM gcr.io/distroless/base
# Everything else is the same :)
ARG APP_FILE
EXPOSE 8080
COPY ${APP_FILE} app
ENTRYPOINT ["/app"]

Action

Build your executable as follows:

mvn native:compile -Pnative -Pdistroless

The generated mostly static native executable, named jibber-distroless, is in the target directory. Now package it into a Distroless container:

docker build -f ./02-smaller-containers/Dockerfile \
    --build-arg APP_FILE=./target/jibber-distroless \
    -t localhost/jibber:distroless.01 .

Take a look at the newly built Distroless image:

docker images

Now you can run and test it as follows:

docker run --rm -d --name "jibber-distroless" -p 8080:8080 localhost/jibber:distroless.01

curl http://localhost:8080/jibber

Great! How small, or large, is your container?

The size is around 105MB! So you have shrunk the container by almost half (from around 199MB). That is a long way down from your starting size of 676MB for the Java container.

Terminate your container and move on to the next step:

docker kill jibber-distroless
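The size comparison is simple arithmetic: the percentage reduction is (from - to) / from × 100. The sketch below applies it to the approximate image sizes quoted in this lab (676MB for the Java image, 199MB for the native image, 105MB for the Distroless image). Your docker images output will differ slightly, so substitute your own numbers. ImageSizes is a hypothetical class.

```java
import java.util.Locale;

// Hypothetical helper: percentage size reduction between two container images.
class ImageSizes {

    // (from - to) / from * 100, e.g. 199MB -> 105MB is roughly a 47% reduction.
    static double percentReduction(double fromMb, double toMb) {
        return (fromMb - toMb) / fromMb * 100.0;
    }

    public static void main(String[] args) {
        // Approximate sizes quoted in this lab; replace with your own `docker images` values.
        double jvmImage = 676;
        double nativeImage = 199;
        double distrolessImage = 105;
        System.out.printf(Locale.ROOT, "native vs JVM: %.1f%% smaller%n",
                percentReduction(jvmImage, nativeImage));
        System.out.printf(Locale.ROOT, "distroless vs native: %.1f%% smaller%n",
                percentReduction(nativeImage, distrolessImage));
        System.out.printf(Locale.ROOT, "distroless vs JVM: %.1f%% smaller%n",
                percentReduction(jvmImage, distrolessImage));
    }
}
```

With these sizes it prints 70.6% (native vs JVM), 47.2% (Distroless vs native), and 84.5% (Distroless vs JVM).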

STEP 7: Using GraalVM Native Build Tools as Part of Your CI/CD Pipeline

In this part of the lab you will build a native executable using GitHub Actions. (GitHub Actions is a continuous integration and continuous delivery (CI/CD) platform that is built into GitHub.) This means that when you push your code to GitHub, GitHub Actions will build it into a native executable.

You will use the GitHub CLI (gh), which has already been installed for you, to interact with GitHub Actions.

Explanation

In order to complete this step, you must have a GitHub account. If you don't have one, sign up for one.

Each GitHub Actions workflow is defined by a single file within your Git repository in the .github/workflows directory. This lab includes a workflow in the file .github/workflows/main.yaml: it uses the GitHub Action for GraalVM. This is the easiest way to install and use the latest version of GraalVM within your workflows.

The contents of the workflow are shown below:

name: GraalVM Spring Boot Demo Github Actions Pipeline (EE)
on: [push, pull_request]
jobs:
  build: # <1>
    runs-on: ${{ matrix.os }}
    strategy:
      matrix:
        os: [macos-latest, ubuntu-latest]
    steps:
      - uses: actions/checkout@v4
      - uses: graalvm/setup-graalvm@v1
        with:
          java-version: '25'
          distribution: 'graalvm' # <2>
          github-token: ${{ secrets.GITHUB_TOKEN }}
      - name: Build and Test Java Code # <3>
        run: |
          ./mvnw --no-transfer-progress native:compile -Pnative -Pbaseline -DskipNativeTests
      - name: Archive production artifacts # <4>
        uses: actions/upload-artifact@v4
        with:
          name: native-binaries-${{ matrix.os }}
          path: |
            target/jibber

<1> The workflow runs two concurrent builds: macOS and Linux (Ubuntu). This builds a native executable for each of those platforms.

<2> The most important step in this workflow is the installation of Oracle GraalVM 25. To install GraalVM Community Edition, pass graalvm-community as the distribution.

<3> Build a native executable.

<4> Upload the built executables as artifacts. These are stored against the GitHub Actions workflow run for a period of time and can be downloaded.

Action

Log in to your GitHub account from the terminal, as follows:

gh auth login

You will be asked a series of questions before you are redirected to a browser where you can log in to GitHub. Use the following responses to the prompts:

- What account do you want to log into? GitHub.com (Default)

- What is your preferred protocol for Git operations? HTTPS (Default)

- Authenticate Git with your GitHub credentials? Y (Default)

- How would you like to authenticate GitHub CLI? Login with a web browser (Default)

Note: The final step of the web browser-based login will ask you for a device code. This is an eight-character code that is generated by the gh auth login command and can be copied from the terminal.

You should now be successfully authenticated locally, and you should be able to use the GitHub CLI to create a repository within your GitHub account.

Push your local code to your GitHub account.

Initialize a Git repository, create a new repository in your GitHub account, and push your local code to it. Use the following commands from the terminal:

git init -b main
git add .
git commit -m "First commit"
gh repo create

Use the following responses to the prompts:

- What would you like to do? Push an existing local repository to GitHub

- Path to local repository . (Default)

- Repository name lunalab2025

- Repository owner <your username> (Default)

- Description GraalVM Luna Lab

- Visibility Public (Default)

- Add a remote? Y (Default)

- What should the new remote be called? origin (Default)

- Would you like to push commits from the current branch to "origin"? Y (Default)

View the build in GitHub Actions:

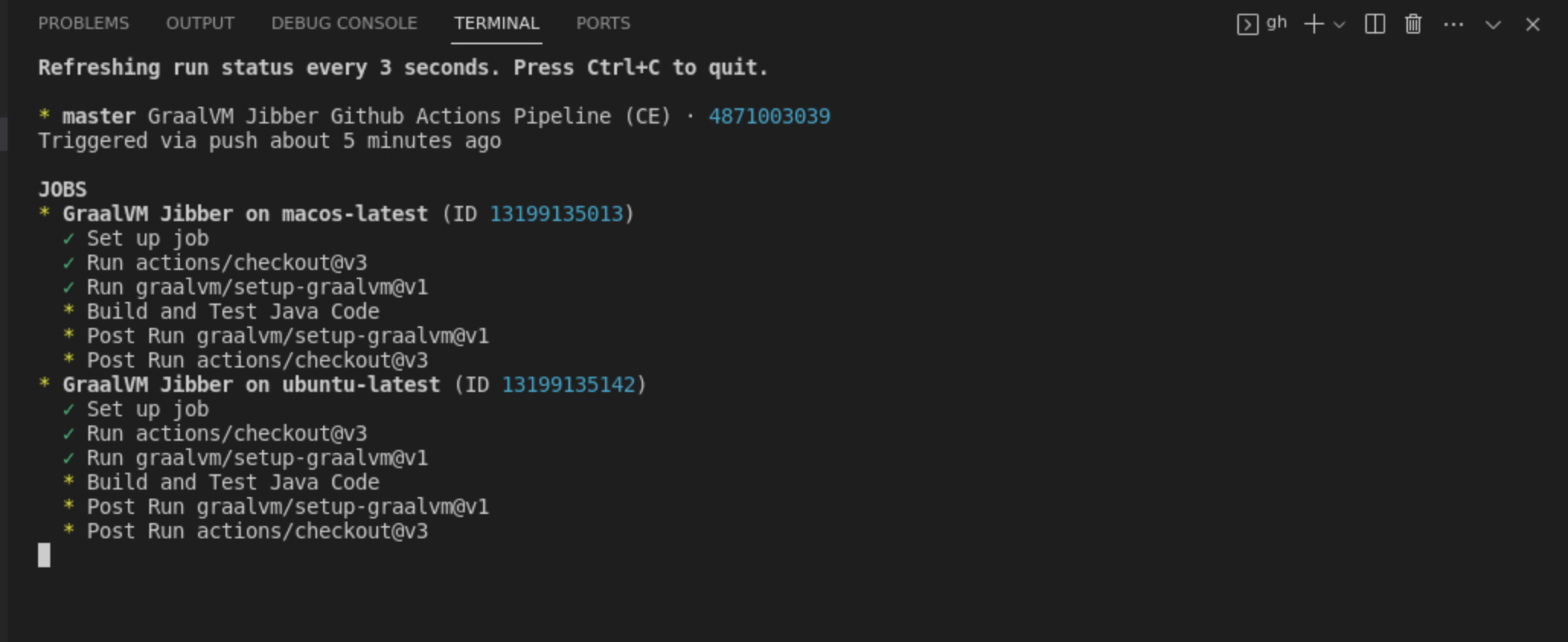

You can monitor the automated build by using the GitHub CLI. From the terminal run the following:

gh run watch

Select the current workflow run. The terminal will then be updated with the status of the GitHub Actions workflow, running remotely on GitHub. This may take a few minutes to complete. The screenshot below shows the status for a workflow run:

Download and run the executable on your laptop - Apple Silicon (ARM) Mac or Intel Ubuntu.

Now that the action has run and built the native executables, you can access your repository and download these files.

- From a web browser on your laptop, NOT from within the lab, go to GitHub and login.

- Open your repositories. You should see the repository that you created during the lab.

- Click on the link to view the repository on GitHub.com.

- Open the GitHub Actions for this repository, by clicking Actions.

- Click a workflow run.

- Under Artifacts, download a ZIP file containing the native executable for your platform. (Click the link that corresponds to the name of your platform.)

Now check within your downloads folder (this will vary according to your platform). You should see a file named native-binaries-<platform>-latest.zip. When you uncompress this, you will see a file named jibber.

You should now be able to run this from the command line on your laptop.

Note: You may need to change the permissions of the file (for example, with chmod +x jibber) before you can run it.

On macOS, you may need to remove the "quarantine" attribute, as follows:

xattr -r -d com.apple.quarantine /path/to/jibber

Summary of GitHub Actions

- Using the GitHub CLI, you authenticated yourself with GitHub.

- You created a repository within GitHub.

- You pushed your local code to the GitHub repository.

- You watched your action run, using the GitHub CLI.

- You downloaded a native executable to your laptop and ran it.

Conclusion

We hope you enjoyed this lab and learnt a few things along the way. You looked at how you can containerise a Java application. Then, you saw how to use GraalVM Native Image to compile that Java application into a native executable, which starts significantly faster than its Java counterpart. You then containerised the native executable and saw that the size of the container image, with the native executable in it, is much smaller than the corresponding Java container image. Then, you looked at how to build mostly-statically linked native executables with Native Image. Finally, you saw how to use GitHub Actions to automate the build of a native executable as part of a CI/CD pipeline.