Deploy an Internal Load Balancer with Oracle Cloud Native Environment

Introduction

Oracle Cloud Native Environment is a fully integrated suite for developing and managing cloud-native applications. The Kubernetes module is the core module. It deploys and manages containers and automatically installs and configures CRI-O and RunC. CRI-O manages the container runtime for a Kubernetes cluster, which defaults to RunC.

Objectives

In this lab, you will learn how to:

- Configure the Kubernetes cluster with an internal load balancer to enable high availability

- Configure Oracle Cloud Native Environment on a 5-node cluster

- Verify keepalived failover between the control plane nodes completes successfully

Support Note: Using the internal load balancer is NOT recommended for production deployments. Instead, please use a correctly configured (external) load balancer.

Prerequisites

Minimum of 6 Oracle Linux instances for the Oracle Cloud Native Environment cluster:

- Operator node

- 3 Kubernetes control plane nodes

- 2 Kubernetes worker nodes

Each system should have Oracle Linux installed and configured with:

- An Oracle user account (used during the installation) with sudo access

- Key-based SSH, also known as password-less SSH, between the hosts

- Prerequisites for Oracle Cloud Native Environment

Additional requirements include:

- A virtual IP address for the primary control plane node.

Do not use this IP address on any of the nodes.

The load balancer dynamically sets the IP address to the control plane node assigned as the primary controller.

Note: If you are deploying to Oracle Cloud Infrastructure, your tenancy requires enabling a new feature introduced in OCI: Layer 2 Networking for VLANs within your virtual cloud networks (VCNs). The OCI Layer 2 Networking feature is not generally available, although the free lab environment's tenancy enables this feature.

If you have a use case, please work with your technical team to get your tenancy listed to use this feature.


Deploy Oracle Cloud Native Environment

Note: If running in your own tenancy, read the linux-virt-labs GitHub project README.md and complete the prerequisites before deploying the lab environment.

Open a terminal on the Luna Desktop.

Clone the linux-virt-labs GitHub project.

    git clone https://github.com/oracle-devrel/linux-virt-labs.git

Change into the working directory.

    cd linux-virt-labs/ocne

Install the required collections.

    ansible-galaxy collection install -r requirements.yml

Update the Oracle Cloud Native Environment configuration.

    cat << EOF | tee instances.yml > /dev/null
    compute_instances:
      1:
        instance_name: "ocne-operator"
        type: "operator"
      2:
        instance_name: "ocne-control-01"
        type: "controlplane"
      3:
        instance_name: "ocne-worker-01"
        type: "worker"
      4:
        instance_name: "ocne-worker-02"
        type: "worker"
      5:
        instance_name: "ocne-control-02"
        type: "controlplane"
      6:
        instance_name: "ocne-control-03"
        type: "controlplane"
    EOF

Deploy the lab environment.

    ansible-playbook create_instance.yml -e localhost_python_interpreter="/usr/bin/python3.6" -e ocne_type=vlan -e use_vlan=true -e "@instances.yml"

The free lab environment requires the extra variable localhost_python_interpreter, which sets ansible_python_interpreter for plays running on localhost. This variable is needed because the environment installs the RPM package for the Oracle Cloud Infrastructure SDK for Python, located under the python3.6 modules.

Important: Wait for the playbook to run successfully and reach the pause task. At this stage of the playbook, the installation of Oracle Cloud Native Environment is complete, and the instances are ready. Take note of the previous play, which prints the public and private IP addresses of the nodes it deploys and any other deployment information needed while running the lab.

Set Firewall Rules on Control Plane Nodes

Open a terminal and connect via SSH to the ocne-operator node.

    ssh oracle@<ip_address_of_node>

Set the firewall rules and enable the Virtual Router Redundancy Protocol (VRRP) on each control plane node.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      ssh $host "sudo firewall-cmd --zone=public --add-port=6444/tcp --permanent; sudo firewall-cmd --zone=public --add-protocol=vrrp --permanent; sudo firewall-cmd --reload"
    done

Note: You must complete this step before proceeding to ensure the load balancer process can communicate between the control plane nodes.
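To confirm the rules took effect, the port and protocol lists reported by firewall-cmd can be inspected on each control plane node. The following is a minimal sketch, not part of the lab: check_lb_firewall is a hypothetical helper name, and it takes the two lists as plain strings so the logic can be tried without a running firewalld.

```shell
# Hypothetical helper (not part of the lab): checks the text that
# firewall-cmd prints for the port and protocol added in the step above.
#   $1: output of `firewall-cmd --zone=public --list-ports`
#   $2: output of `firewall-cmd --zone=public --list-protocols`
check_lb_firewall() {
  # Pad with spaces so only whole entries match.
  local ports=" $1 " protocols=" $2 "
  case "$ports" in
    *" 6444/tcp "*) ;;
    *) echo "missing port 6444/tcp"; return 1 ;;
  esac
  case "$protocols" in
    *" vrrp "*) ;;
    *) echo "missing protocol vrrp"; return 1 ;;
  esac
  echo "ok"
}

# Sample input standing in for real firewall-cmd output (assumed values).
check_lb_firewall "6444/tcp 8090/tcp 10250/tcp" "vrrp"
```

On a control plane node, the two arguments would come from running sudo firewall-cmd --zone=public --list-ports and sudo firewall-cmd --zone=public --list-protocols.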

Create a Platform CLI Configuration File

Administrators can use a configuration file to simplify creating and managing environments and modules. The configuration file, written in valid YAML syntax, includes all information about the environments and modules to deploy. Using a configuration file saves repeated entries of Platform CLI command options.

Note: When entering more than one control plane node in the myenvironment.yaml file while configuring Oracle Cloud Native Environment, the olcnectl command requires setting the virtual IP address option in the configuration file. In the Kubernetes module section, enter the argument virtual-ip: <vrrp-ip-address>.

More information on creating a configuration file is in the documentation at Using a Configuration File.

Create a configuration file.

    cat << EOF | tee ~/myenvironment.yaml > /dev/null
    environments:
      - environment-name: myenvironment
        globals:
          api-server: 127.0.0.1:8091
          secret-manager-type: file
          olcne-ca-path: /etc/olcne/certificates/ca.cert
          olcne-node-cert-path: /etc/olcne/certificates/node.cert
          olcne-node-key-path: /etc/olcne/certificates/node.key
        modules:
          - module: kubernetes
            name: mycluster
            args:
              container-registry: container-registry.oracle.com/olcne
              virtual-ip: 10.0.12.111
              control-plane-nodes:
                - 10.0.12.10:8090
                - 10.0.12.11:8090
                - 10.0.12.12:8090
              worker-nodes:
                - 10.0.12.20:8090
                - 10.0.12.21:8090
              selinux: enforcing
              restrict-service-externalip: true
              restrict-service-externalip-ca-cert: /home/oracle/certificates/ca/ca.cert
              restrict-service-externalip-tls-cert: /home/oracle/certificates/restrict_external_ip/node.cert
              restrict-service-externalip-tls-key: /home/oracle/certificates/restrict_external_ip/node.key
    EOF
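The rule described above (more than one control plane node requires a virtual-ip entry) can be checked before running olcnectl. This is a rough sketch under stated assumptions: the function names are hypothetical, the file is scanned line by line rather than parsed as YAML, it assumes a single Kubernetes module per file, and it does not account for other load balancer options the Platform CLI may accept.

```shell
# Hypothetical helpers (not part of olcnectl): a line-based sanity check
# of a configuration file laid out like myenvironment.yaml.

# Count the list items directly under the control-plane-nodes key.
count_control_plane_nodes() {
  awk '
    /^[ \t]*control-plane-nodes:/ { in_list = 1; next }
    in_list && /^[ \t]*- /        { count++; next }
    in_list                       { in_list = 0 }
    END { print count + 0 }
  ' "$1"
}

# Fail when several control plane nodes are listed without a virtual-ip.
check_virtual_ip() {
  local nodes
  nodes=$(count_control_plane_nodes "$1")
  if [ "$nodes" -gt 1 ] &&
     ! grep -Eq '^[[:space:]]*virtual-ip:[[:space:]]*[^[:space:]]' "$1"; then
    echo "error: $nodes control plane nodes but no virtual-ip entry"
    return 1
  fi
  echo "ok: $nodes control plane node(s)"
}

# Try it on a small sample fragment written to a temporary file.
sample=$(mktemp)
cat > "$sample" << 'EOF'
args:
  virtual-ip: 10.0.12.111
  control-plane-nodes:
    - 10.0.12.10:8090
    - 10.0.12.11:8090
    - 10.0.12.12:8090
  worker-nodes:
    - 10.0.12.20:8090
EOF
check_virtual_ip "$sample"
rm -f "$sample"
```

In the lab, the same check could be pointed at ~/myenvironment.yaml.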

Create the Environment and Kubernetes Module

Create the environment.

    cd ~
    olcnectl environment create --config-file myenvironment.yaml

Create the Kubernetes module.

    olcnectl module create --config-file myenvironment.yaml

Validate the Kubernetes module.

    olcnectl module validate --config-file myenvironment.yaml

There are no validation errors in the free lab environment. The command's output provides the steps required to fix the nodes if there are any errors.

Install the Kubernetes module.

    olcnectl module install --config-file myenvironment.yaml

The deployment of Kubernetes to the nodes may take several minutes to complete.

Validate the deployment of the Kubernetes module.

    olcnectl module instances --config-file myenvironment.yaml

Example Output:

    [oracle@ocne-operator ~]$ olcnectl module instances --config-file myenvironment.yaml
    INSTANCE          MODULE      STATE
    10.0.12.10:8090   node        installed
    10.0.12.11:8090   node        installed
    10.0.12.12:8090   node        installed
    10.0.12.20:8090   node        installed
    10.0.12.21:8090   node        installed
    mycluster         kubernetes  installed
    [oracle@ocne-operator ~]$

Set up kubectl

Set up the kubectl command on the control plane nodes.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      ssh $host /bin/bash <<EOF
        mkdir -p $HOME/.kube
        sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        sudo chown $(id -u):$(id -g) $HOME/.kube/config
        export KUBECONFIG=$HOME/.kube/config
        echo 'export KUBECONFIG=$HOME/.kube/config' >> $HOME/.bashrc
    EOF
    done

Repeating this step on each control plane node is essential because any one of them may go offline. In practice, install the kubectl utility on a separate node outside of the cluster.

Verify that kubectl works and that the install completed successfully, with all nodes listed as being in the Ready status.

    ssh ocne-control-01 kubectl get nodes

Example Output:

    [oracle@ocne-control-01 ~]$ kubectl get nodes
    NAME              STATUS   ROLES           AGE     VERSION
    ocne-control-01   Ready    control-plane   12m     v1.28.3+3.el8
    ocne-control-02   Ready    control-plane   10m     v1.28.3+3.el8
    ocne-control-03   Ready    control-plane   9m28s   v1.28.3+3.el8
    ocne-worker-01    Ready    <none>          8m36s   v1.28.3+3.el8
    ocne-worker-02    Ready    <none>          8m51s   v1.28.3+3.el8
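Reading the STATUS column by eye works for five nodes, but the same check can be scripted. The sketch below assumes the default column layout of kubectl get nodes shown in the example output; not_ready_nodes is a hypothetical helper name. It reports any status other than exactly Ready, so a cordoned node (Ready,SchedulingDisabled) would also be listed.

```shell
# Hypothetical helper: reads `kubectl get nodes` output on stdin and prints
# the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input in the same layout as the example output in this lab.
not_ready_nodes << 'EOF'
NAME              STATUS     ROLES           AGE   VERSION
ocne-control-01   NotReady   control-plane   51m   v1.28.3+3.el8
ocne-control-02   Ready      control-plane   50m   v1.28.3+3.el8
ocne-worker-01    Ready      <none>          48m   v1.28.3+3.el8
EOF
```

Against the cluster, the function would be fed from the command above, for example ssh ocne-control-01 kubectl get nodes | not_ready_nodes. No output means every node reports Ready.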

Confirm Failover Between Control Plane Nodes

The Oracle Cloud Native Environment installation with three control plane nodes behind an internal load balancer is complete.

The following steps confirm that the internal load balancer (using keepalived) detects when the primary control plane node fails and passes control to one of the surviving control plane nodes. Likewise, when the 'missing' node recovers, it can automatically rejoin the cluster.

Locate the Primary Control Plane Node

Determine which control plane node currently holds the virtual IP address.

List each control plane node's network devices and IP addresses.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      ssh $host "echo -e '\n---- $host ----\n'; ip -br a"
    done

The -br option summarizes the IP address information assigned to a device.

Look at the results and find which host's output contains the virtual IP associated with the ens5 NIC.

In the free lab environment, the virtual IP address used by the keepalived daemon is set to 10.0.12.111.

    ens5             UP             10.0.12.11/24 10.0.12.111/32 fe80::41f6:2a0d:9a89:13d0/64

Example Output:

    ---- ocne-control-01 ----

    lo               UNKNOWN        127.0.0.1/8 ::1/128
    ens3             UP             10.0.0.150/24 fe80::17ff:fe06:56dd/64
    ens5             UP             10.0.12.10/24 fe80::b5ee:51ab:8efd:92de/64
    flannel.1        UNKNOWN        10.244.0.0/32 fe80::e80c:9eff:fe27:e0b3/64

    ---- ocne-control-02 ----

    lo               UNKNOWN        127.0.0.1/8 ::1/128
    ens3             UP             10.0.0.151/24 fe80::17ff:fe02:f7d0/64
    ens5             UP             10.0.12.11/24 fe80::d7b2:5f5b:704b:369/64
    flannel.1        UNKNOWN        10.244.1.0/32 fe80::48ae:67ff:fef1:862c/64

    ---- ocne-control-03 ----

    lo               UNKNOWN        127.0.0.1/8 ::1/128
    ens3             UP             10.0.0.152/24 fe80::17ff:fe0b:7feb/64
    ens5             UP             10.0.12.12/24 10.0.12.111/32 fe80::ba57:2df0:d652:f8b0/64
    flannel.1        UNKNOWN        10.244.2.0/32 fe80::10db:c4ff:fee9:2121/64
    cni0             UP             10.244.2.1/24 fe80::bc0b:26ff:fe2d:383e/64
    veth1814a0d4@if2 UP             fe80::ec22:ddff:fe41:1695/64
    veth321409fc@if2 UP             fe80::485e:c4ff:feec:6914/64

The ocne-control-03 node contains the virtual IP address in the example output.

Important: Take note of which control plane node currently holds the virtual IP address in your running environment.
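Instead of scanning the output by eye, the search for the virtual IP can be scripted. The following sketch assumes the ip -br a column layout shown above (device, state, then addresses); vip_device is a hypothetical helper name. It compares whole addresses, so 10.0.12.11 is not mistaken for 10.0.12.111.

```shell
# Hypothetical helper: reads `ip -br a` output on stdin and prints the
# device that carries the given address. Exits non-zero if none does.
vip_device() {
  awk -v vip="$1" '
    {
      # Fields 3 and up are addresses in address/prefix form.
      for (i = 3; i <= NF; i++) {
        split($i, parts, "/")
        if (parts[1] == vip) { print $1; found = 1 }
      }
    }
    END { exit (found ? 0 : 1) }
  '
}

# Sample input copied from the example output for ocne-control-03.
vip_device 10.0.12.111 << 'EOF'
lo               UNKNOWN        127.0.0.1/8 ::1/128
ens3             UP             10.0.0.152/24 fe80::17ff:fe0b:7feb/64
ens5             UP             10.0.12.12/24 10.0.12.111/32 fe80::ba57:2df0:d652:f8b0/64
EOF
```

Run per host inside the loop above, for example ssh $host ip -br a | vip_device 10.0.12.111, the exit status shows whether that host currently holds the virtual IP.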

(Optional) Confirm which control plane node holds the virtual IP address by querying the keepalived.service logs on each control plane node.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      printf "======= $host =======\n\n"
      ssh $host "sudo journalctl -u keepalived | grep vrrp | grep -i ENTER"
    done

Look at the last entry in the specific host's output list.

Example Output:

    ...
    Aug 10 23:47:26 ocne-control01 Keepalived_vrrp[55605]: (VI_1) Entering MASTER STATE
    ...

This control plane node has the virtual IP address assigned.

Example Output:

    ...
    Aug 10 23:54:59 ocne-control02 Keepalived_vrrp[59961]: (VI_1) Entering BACKUP STATE (init)
    ...

This control plane node does not.
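Because only the last state transition in each host's log matters, the log lines can be reduced to a single word per host. This is a small sketch that assumes the message format shown in the example output above; last_vrrp_state is a hypothetical helper name.

```shell
# Hypothetical helper: reads keepalived journal lines on stdin and prints
# the state named in the last "Entering ... STATE" message.
last_vrrp_state() {
  awk '
    /Entering (MASTER|BACKUP|FAULT) STATE/ {
      # The word right after "Entering" is the state name.
      for (i = 1; i < NF; i++) if ($i == "Entering") state = $(i + 1)
    }
    END { if (state != "") print state }
  '
}

# Sample input in the format of the example output (assumed log lines).
last_vrrp_state << 'EOF'
Aug 10 23:40:02 ocne-control01 Keepalived_vrrp[55605]: (VI_1) Entering BACKUP STATE (init)
Aug 10 23:47:26 ocne-control01 Keepalived_vrrp[55605]: (VI_1) Entering MASTER STATE
EOF
```

A host whose last state is MASTER holds the virtual IP. On a live node, the input would come from the journalctl command used in the loop above.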

Force the keepalived Daemon to Move to a Different Control Plane Node

Double-click the Luna Lab icon on the desktop in the free lab environment and navigate to the Luna Lab tab. Then click on the OCI Console hyperlink. Sign on using the provided User Name and Password values. After logging on, proceed with the following steps:

Start from this screen.

Click on the navigation menu at the top left corner of the page.

Then click on the Compute menu item and the Instances pinned link in the main panel.

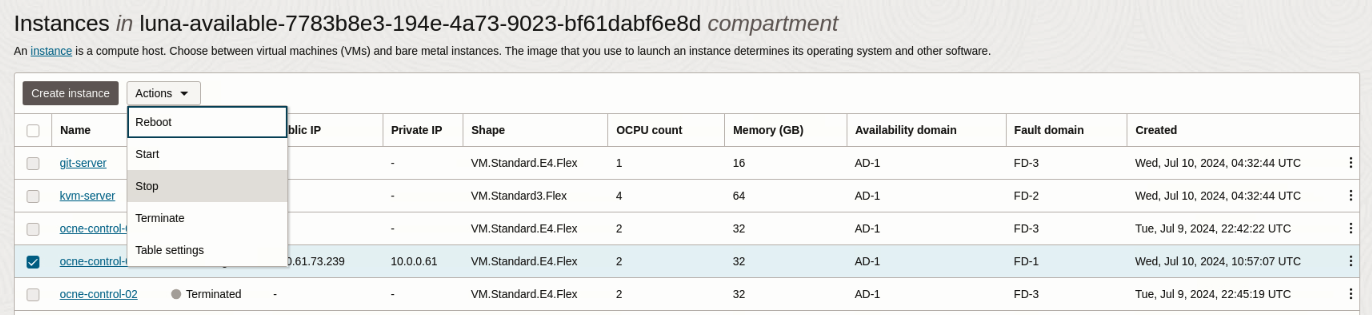

Click the checkbox next to the instance name matching the one that contains the virtual IP address.

Select Stop from the Actions drop-down list of values.

Click the Stop button in the Stop instances panel.

Once the instance stops, click the Close button in the Stop instances panel.

Switch from the browser back to the terminal session.

Confirm that the control plane node you just shut down is reporting as NotReady.

Note: You may need to repeat this step several times, and may see a connection timeout, until the status changes.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      ssh -o ConnectTimeout=10 $host "echo -e '\n---- $host ----\n'; kubectl get nodes"
    done

The -o ConnectTimeout=<time-in-seconds> option overrides the default TCP timeout, so ssh stops trying to connect after that many seconds when a host is down or unreachable.

Example Output:

    NAME              STATUS     ROLES           AGE   VERSION
    ocne-control-01   NotReady   control-plane   51m   v1.28.3+3.el8
    ocne-control-02   Ready      control-plane   50m   v1.28.3+3.el8
    ocne-control-03   Ready      control-plane   49m   v1.28.3+3.el8
    ocne-worker-01    Ready      <none>          48m   v1.28.3+3.el8
    ocne-worker-02    Ready      <none>          48m   v1.28.3+3.el8

Determine which node has the running keepalived daemon.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      ssh -o ConnectTimeout=10 $host "echo -e '\n---- $host ----\n'; ip -br a"
    done

Example Output:

    ssh: connect to host ocne-control-01 port 22: Connection timed out

    ---- ocne-control-02 ----

    lo               UNKNOWN        127.0.0.1/8 ::1/128
    ens3             UP             10.0.0.56/28 fe80::17ff:fe0e:5b87/64
    ens5             UP             10.0.12.11/24 fe80::f3aa:21fd:c0d4:7636/64
    flannel.1        UNKNOWN        10.244.2.0/32 fe80::ac89:deff:fee8:b005/64

    ---- ocne-control-03 ----

    lo               UNKNOWN        127.0.0.1/8 ::1/128
    ens3             UP             10.0.0.50/28 fe80::17ff:fe20:2db5/64
    ens5             UP             10.0.12.12/24 10.0.12.111/32 fe80::729d:a432:4a0f:f922/64
    flannel.1        UNKNOWN        10.244.3.0/32 fe80::4460:27ff:fe27:86bf/64

The virtual IP is now associated with ocne-control-03 per the sample output.

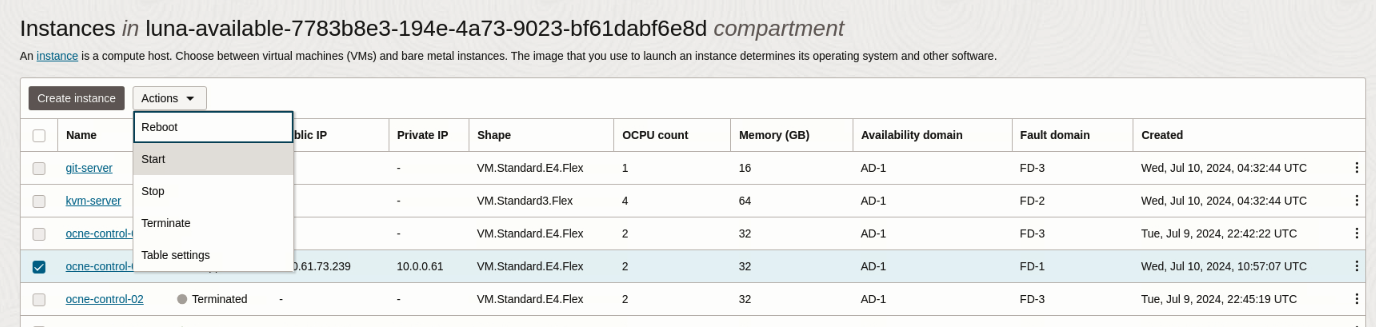

Switch back to the browser.

In the Cloud Console, start the Instance that was previously shut down by clicking the checkbox next to it and then selecting Start from the Actions drop-down list of values.

Click the Start button in the Start instances panel and then the Close button once the instance starts.

Switch back to the terminal session.

Confirm that kubectl shows all control plane nodes as being Ready.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      ssh -o ConnectTimeout=10 $host "echo -e '\n---- $host ----\n'; kubectl get nodes"
    done

Note: It may be necessary to repeat this step several times until the status changes.

Example Output:

    NAME              STATUS   ROLES           AGE   VERSION
    ocne-control-01   Ready    control-plane   62m   v1.28.3+3.el8
    ocne-control-02   Ready    control-plane   60m   v1.28.3+3.el8
    ocne-control-03   Ready    control-plane   59m   v1.28.3+3.el8
    ocne-worker-01    Ready    <none>          58m   v1.28.3+3.el8
    ocne-worker-02    Ready    <none>          58m   v1.28.3+3.el8

Confirm the location of the active keepalived daemon.

    for host in ocne-control-01 ocne-control-02 ocne-control-03
    do
      ssh -o ConnectTimeout=10 $host "echo -e '\n---- $host ----\n'; ip -br a"
    done

The keepalived daemon keeps the virtual IP with the currently active host, despite the original host restarting. This behavior happens because Oracle Cloud Native Environment sets each node's weight equally in the keepalived configuration file.
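Several of the failover checks above may need repeating until a node's status changes. Rather than rerunning a command by hand, a small polling helper can retry it. The sketch below is one possible approach, not part of the lab; retry_until and the stand-in check third_time_lucky are hypothetical names.

```shell
# Hypothetical helper: run a command up to N times, sleeping between
# attempts, and stop as soon as it succeeds.
#   $1: maximum attempts   $2: delay in seconds   rest: command to run
retry_until() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Only sleep when another attempt will follow.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Demo with a stand-in check that succeeds on its third call.
calls=0
third_time_lucky() { calls=$((calls + 1)); [ "$calls" -ge 3 ]; }
retry_until 5 0 third_time_lucky && echo "succeeded after $calls attempts"
```

In the lab, the command passed to retry_until would be a check built from the kubectl get nodes call above, with a delay of several seconds between attempts.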

Summary

These steps confirm that the Internal Load Balancer based on keepalived has been configured correctly and accepts requests successfully for Oracle Cloud Native Environment.